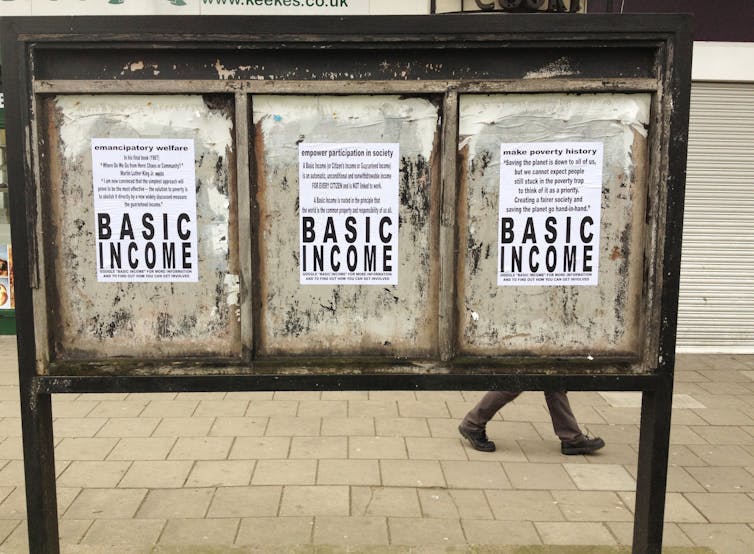

There’s a hue and cry about Doug Ford’s scrapping of Ontario’s basic income project. But the project was a failing experiment with a dearth of high-quality data. Flickr

By Gregory C Mason | August 9, 2018

Social and anti-poverty activists have greeted the dumping of Ontario’s basic income pilot project with the usual lamentations. These critics of the new Conservative government argue the funds allocated to the project are a pittance compared to the costs of poverty, and that we have missed out on learning about the impact of a basic income.

I disagree. The pilot needed to stop. As someone who consulted on the project, I believe it had core design flaws that militated against ever answering questions about basic income.

These shortcomings do not reflect badly on the team that developed and worked on the project. Rather, the limitations of the pilot reflect the realities of executing social experiments in the modern era as governments make expedient decisions.

Ontario’s pilot project was a negative income tax scheme (which means the government pays some citizens instead of those citizens paying taxes to the government). The project was a direct descendant of the Manitoba Basic Annual Income Experiment, or Mincome, conducted between 1974 and 1978. That project enrolled low-income Manitobans into a rigorous randomized controlled trial in three areas: Winnipeg, Dauphin and a dispersed rural region.

The finding from Mincome, as well as from the other income-maintenance experiments of the 1970s, is that a small work disincentive does exist in the short run (three years). But, and this is critical, no one has measured whether people “slack off” over the longer term. Despite many millions having been spent on income-maintenance experiments in North America, we are still clueless about this central question.

The Ontario basic income project attempted to create a random assignment of participants into a treatment and control group, but failed. This is where the realities of modern government and privacy sabotaged the pilot from the very start.

Difficult to find appropriate households

One might imagine that it’s easy to select a sample of low-income households. After all, there are income tax and social assistance records. But three things undermine the use of these records in social experiments.

First, the Canada Revenue Agency (CRA) has always been very careful about sharing tax information. For example, it wasn’t until 2016 that Statistics Canada could add income tax records to census information. Provinces wishing to use federal income tax data for program administration need to negotiate a data-sharing agreement with CRA.

An anti-poverty activist is led away by police in 2010 in Toronto following an occupation of Liberal Party offices. Activists were protesting welfare cuts, but the province’s basic income project suffered from missing data and needs a redesign. THE CANADIAN PRESS/Chris Young

This was not done prior to the Ontario basic income experiment. In Ontario, once applicants were fully enrolled, they allowed the government to access their tax records. It is not known whether the prospect of granting such access affected the willingness to participate in the first place.

Second, while Ontario has access to records since it levies a personal income tax, conducting such an experiment was not within the mandate of the Ministry of Finance.

A negative income tax is still a tax program, and needs to be delivered by a tax authority, namely the Ministry of Finance. When the Ministry of Finance could not accept responsibility for Ontario’s basic income project, the Ministry of Community and Social Services became the home for the pilot. In principle, this should have opened access to social assistance records, but privacy barriers prevented access to this information as well.

Third and most importantly, many individuals who would qualify for a basic income fly under the administrative radar, because some on social assistance and many low-income households do not file tax returns. So sampling from income tax and social assistance records misses many potentially eligible participants.

Headaches getting Ontario project running

This forced Ontario to use an open enrolment process in the two main sites for the project — Thunder Bay and Hamilton. Enrolment relied on a letter sent to the general population within the two test sites. This failed dramatically.

First, after the privacy lawyers finished with the introductory materials, the invitation became long, legal and impenetrable. It took more than a month to trim the introductory materials, further delaying enrolment.

Second, it always surprises planners how many low-income individuals miss out on programs that would increase their financial well-being. Everyone involved was astonished at how few people applied to participate in the pilot.

Third, it required extensive support and repeated contact to secure tax and banking information from applicants to finalize their eligibility.

Ontario started mailing invitations in June 2017 and by September, after mailing 37,000 invitations, it had managed to enrol barely 150 participants (roughly 0.4 per cent of those invited), well short of the original target of 2,000. This prompted a revised enrolment process that involved direct solicitation through community organizations, which after great effort did manage to raise enrolment.

However, we were a long way from a random allocation of participants into the program, or from having control groups in place.

A dearth of high-quality data

The Ontario pilot also involved a sample of participants steeped in self-selection biases that would have required arcane econometrics to unravel. Such statistical magic requires high-quality data. Unfortunately, the pilot also failed here.

To understand this, it’s important to appreciate that basic income has become the supposed magic bullet of modern public policy. In the 1970s, the main question was: “Will a basic income lower poverty, while not reducing work participation?” Today, basic income is expected to increase health, reduce stress, support increased educational attainment, allow families to move to better homes, increase food security, enhance social connectedness, etc.

Furthermore, even though most respondents in the Ontario experiment had access to smartphones, the majority of them preferred to complete a printed questionnaire they needed to mail in.

Anyone familiar with survey methodology understands that such data collection requires persistent follow-up to the point of harassment. A printed questionnaire with conventional mail-back requires the most follow-up: as many as 10 attempts before the respondent is abandoned. Non-response is usually high.

Assault on data quality

The result is that on top of the distorted sample, the information collected to support hypothesis-testing suffered from the participants’ non-response that’s common to all survey data — yet another assault on the data quality of the Ontario pilot.

The design flaws represent institutional and logistical challenges, created partly because no one could foresee the privacy barriers that currently affect information access in government. The Ontario project was attempting, unsuccessfully, to test multiple hypotheses within a complex environment of change.

The result is that no simple story would have emerged from Ontario’s basic income experiment, even under ideal conditions.

Politicians need simple stories. If the project had continued and been able to produce reports based on quality data, it would have contributed much more meaningful heft to the critical debate on guaranteed basic income. And that is truly a missed opportunity.

Gregory C Mason, Associate Professor of Economics, University of Manitoba